Hadoop HA with QJM: hands-on

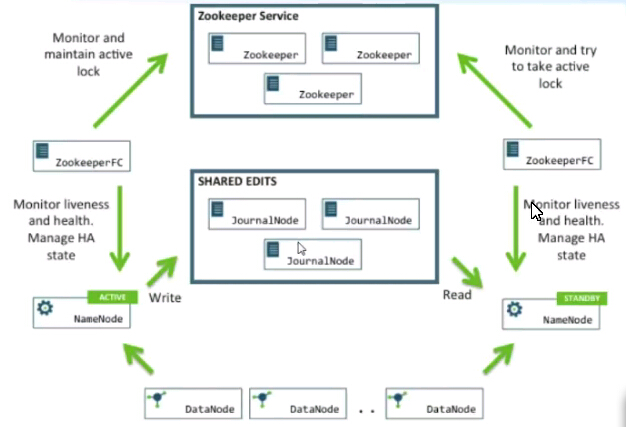

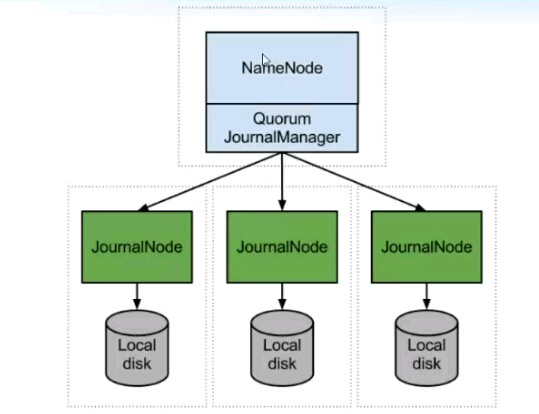

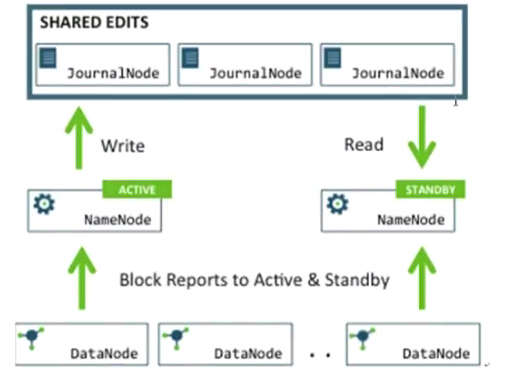

Implementing HDFS HA with QJM

Official documentation for QJM-based HA: http://hadoop.apache.org/docs/r2.5.2/hadoop-project-dist/hadoop-hdfs/HDFSHighAvailabilityWithQJM.html

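Q --> Quorum: a voting algorithm commonly used in distributed systems to guarantee data redundancy and eventual consistency

J --> Journal: the (edit) log

M --> Manager

QJM is an HDFS HA solution based on the Paxos algorithm.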
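The quorum idea boils down to simple majority arithmetic: an edit only counts as written once a majority of JournalNodes acknowledge it. This cluster runs three JournalNodes (see `dfs.namenode.shared.edits.dir` below). The sketch below shows the numbers; `quorum_size` and `max_failures` are illustrative helper names, not part of Hadoop.

```shell
# Majority-quorum arithmetic for a JournalNode ensemble (illustrative sketch).

# quorum_size N: JournalNodes that must acknowledge each edit-log write
quorum_size() {
  echo $(( $1 / 2 + 1 ))
}

# max_failures N: JournalNodes that may be down while writes still succeed
max_failures() {
  echo $(( ($1 - 1) / 2 ))
}

for n in 3 4 5; do
  echo "JournalNodes=$n quorum=$(quorum_size "$n") tolerated_failures=$(max_failures "$n")"
done
```

Going from 3 to 4 JournalNodes raises the quorum from 2 to 3 but still tolerates only one failure, which is why the official documentation asks for at least 3 JournalNodes and an odd number of them.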

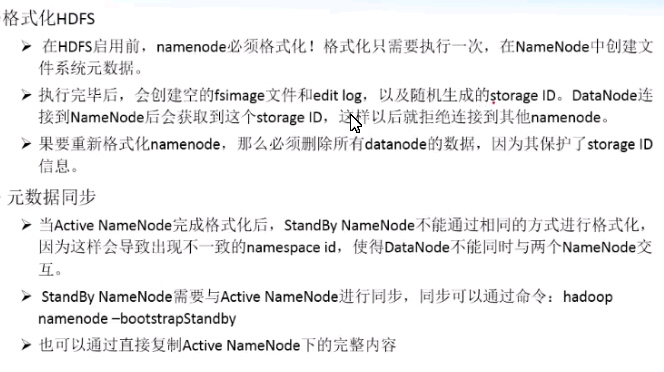

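In production, after formatting the NameNode, be sure to set the dfs.namenode.support.allow.format property to false so the NameNode cannot be formatted again by accident.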


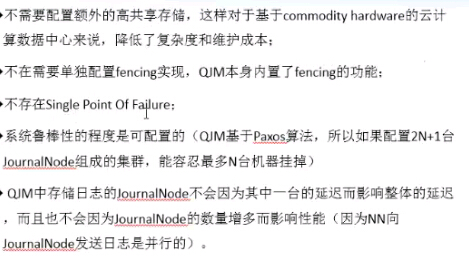

Advantages of QJM:

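QJM configuration

- Basic NameNode HA configuration (core-site.xml, hdfs-site.xml)
  - Addresses of the Active NameNode and the Standby NameNode
  - Local storage paths for the NameNode and DataNode
  - HDFS namespace access
  - Fencing (set up passwordless SSH login between the two nodes, in both directions)
- QJM configuration (hdfs-site.xml)
  - Edit log managed by QJM
  - Edit log storage directory
- Manual failover (no configuration required)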
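Since manual failover needs no extra configuration, it is driven entirely from the command line with `hdfs haadmin`. A minimal sketch that reports which NameNode is currently active, assuming the NameNode IDs nn128 and nn129 defined in the hdfs-site.xml below and that it runs from the Hadoop install directory; `active_namenode` and the `HDFS_BIN` override are hypothetical helpers, not Hadoop features.

```shell
# Print the ID of the first NameNode that reports "active" (sketch).
# HDFS_BIN is a hypothetical override; by default bin/hdfs is used.
active_namenode() {
  local hdfs=${HDFS_BIN:-bin/hdfs} id state
  for id in "$@"; do
    # `hdfs haadmin -getServiceState <id>` prints "active" or "standby"
    state=$("$hdfs" haadmin -getServiceState "$id" 2>/dev/null)
    if [ "$state" = active ]; then
      echo "$id"
      return 0
    fi
  done
  return 1   # no NameNode reported itself as active
}

# Usage: active_namenode nn128 nn129
```

Once the active node is known, `bin/hdfs haadmin -failover nn128 nn129` requests a failover from the first ID to the second.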

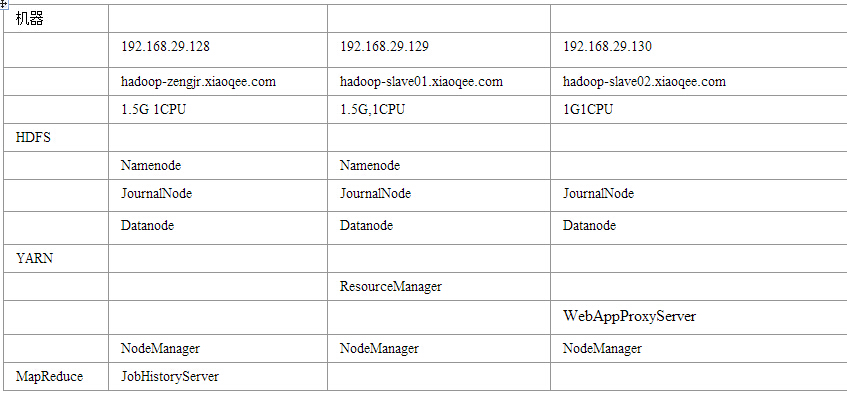

Machine layout

QJM: core-site.xml configuration

```xml
<configuration>
  <!-- The default filesystem points at the HA nameservice, not at a single host -->
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://hadoop-cluster</value>
  </property>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/opt/modules/hadoop-2.5.0/data/tmp</value>
  </property>
</configuration>
```

QJM: hdfs-site.xml configuration

```xml
<configuration>
  <!-- Logical name of the nameservice -->
  <property>
    <name>dfs.nameservices</name>
    <value>hadoop-cluster</value>
  </property>
  <!-- IDs of the two NameNodes in this nameservice -->
  <property>
    <name>dfs.ha.namenodes.hadoop-cluster</name>
    <value>nn128,nn129</value>
  </property>
  <!-- Client RPC address of each NameNode -->
  <property>
    <name>dfs.namenode.rpc-address.hadoop-cluster.nn128</name>
    <value>hadoop-zengjr.xiaoqee.com:8020</value>
  </property>
  <property>
    <name>dfs.namenode.rpc-address.hadoop-cluster.nn129</name>
    <value>hadoop-slave01.xiaoqee.com:8020</value>
  </property>
  <!-- Service RPC address used by DataNodes and other HDFS services -->
  <property>
    <name>dfs.namenode.servicerpc-address.hadoop-cluster.nn128</name>
    <value>hadoop-zengjr.xiaoqee.com:8022</value>
  </property>
  <property>
    <name>dfs.namenode.servicerpc-address.hadoop-cluster.nn129</name>
    <value>hadoop-slave01.xiaoqee.com:8022</value>
  </property>
  <!-- Web UI address of each NameNode -->
  <property>
    <name>dfs.namenode.http-address.hadoop-cluster.nn128</name>
    <value>hadoop-zengjr.xiaoqee.com:50070</value>
  </property>
  <property>
    <name>dfs.namenode.http-address.hadoop-cluster.nn129</name>
    <value>hadoop-slave01.xiaoqee.com:50070</value>
  </property>
  <!-- JournalNodes that hold the shared edit log; the trailing path is the journal ID -->
  <property>
    <name>dfs.namenode.shared.edits.dir</name>
    <value>qjournal://hadoop-zengjr.xiaoqee.com:8485;hadoop-slave01.xiaoqee.com:8485;hadoop-slave02.xiaoqee.com:8485/hadoop-cluster</value>
  </property>
  <!-- Class HDFS clients use to find the active NameNode -->
  <property>
    <name>dfs.client.failover.proxy.provider.hadoop-cluster</name>
    <value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>
  </property>
  <!-- Fence the previously active NameNode by SSHing in and killing the process -->
  <property>
    <name>dfs.ha.fencing.methods</name>
    <value>sshfence</value>
  </property>
  <property>
    <name>dfs.ha.fencing.ssh.private-key-files</name>
    <value>/home/zengjr/.ssh/id_rsa</value>
  </property>
  <!-- Local directory where each JournalNode stores edits -->
  <property>
    <name>dfs.journalnode.edits.dir</name>
    <value>/opt/modules/hadoop-2.5.0/data/dfs/jn</value>
  </property>
  <!-- Local storage for NameNode metadata, NameNode edits and DataNode blocks -->
  <property>
    <name>dfs.namenode.name.dir</name>
    <value>file:///opt/modules/hadoop-2.5.0/data/dfs/nn/name</value>
  </property>
  <property>
    <name>dfs.namenode.edits.dir</name>
    <value>file:///opt/modules/hadoop-2.5.0/data/dfs/nn/edits</value>
  </property>
  <property>
    <name>dfs.datanode.data.dir</name>
    <value>file:///opt/modules/hadoop-2.5.0/data/dfs/nn/dn</value>
  </property>
  <!-- Disable HDFS permission checking -->
  <property>
    <name>dfs.permissions.enabled</name>
    <value>false</value>
  </property>
</configuration>
```
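The `qjournal://` value of `dfs.namenode.shared.edits.dir` lists every JournalNode as `host:port`, separated by semicolons, followed by a journal ID (here the nameservice name, hadoop-cluster). A small sketch that assembles it; `qjournal_uri` is a hypothetical helper for illustration.

```shell
# Build a dfs.namenode.shared.edits.dir value (sketch):
#   qjournal://host1:port;host2:port;host3:port/journalId
# Usage: qjournal_uri JOURNAL_ID PORT HOST...
qjournal_uri() {
  local journal_id=$1 port=$2 uri="" host
  shift 2
  for host in "$@"; do
    # JournalNode addresses are separated by ';'
    if [ -z "$uri" ]; then
      uri="$host:$port"
    else
      uri="$uri;$host:$port"
    fi
  done
  echo "qjournal://$uri/$journal_id"
}

qjournal_uri hadoop-cluster 8485 \
  hadoop-zengjr.xiaoqee.com hadoop-slave01.xiaoqee.com hadoop-slave02.xiaoqee.com
```

With the three hosts used here it reproduces the value configured in hdfs-site.xml.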

QJM: slaves file

```
hadoop-zengjr.xiaoqee.com
hadoop-slave02.xiaoqee.com
hadoop-slave01.xiaoqee.com
```

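QJM: distributing the configuration

```shell
scp -r etc/hadoop/ zengjr@hadoop-slave01.xiaoqee.com:/opt/modules/hadoop-2.5.0/etc/
scp -r etc/hadoop/ zengjr@hadoop-slave02.xiaoqee.com:/opt/modules/hadoop-2.5.0/etc/
```

Note: passwordless SSH login between the machines must be set up for this; see my earlier posts for details.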
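Because the slaves file already lists every host, the `scp` commands above can be generated in a loop. A sketch, assuming it runs from the Hadoop install directory on the host holding the master copy, and that every host uses the user zengjr and the path /opt/modules/hadoop-2.5.0; `push_conf`, `SSH_USER`, `REMOTE_HADOOP_DIR` and `DRY_RUN` are illustrative names.

```shell
# Copy etc/hadoop/ to every host in a slaves file except the local one (sketch).
# DRY_RUN=1 only prints the scp commands.
SSH_USER=zengjr
REMOTE_HADOOP_DIR=/opt/modules/hadoop-2.5.0

push_conf() {
  local slaves_file=$1 self=$2 host
  # "|| [ -n "$host" ]" also handles a last line without a trailing newline
  while IFS= read -r host || [ -n "$host" ]; do
    [ -z "$host" ] && continue          # skip blank lines
    [ "$host" = "$self" ] && continue   # skip the machine we are running on
    if [ "${DRY_RUN:-0}" = 1 ]; then
      echo "scp -r etc/hadoop/ $SSH_USER@$host:$REMOTE_HADOOP_DIR/etc/"
    else
      # </dev/null keeps scp/ssh from consuming the loop's stdin
      scp -r etc/hadoop/ "$SSH_USER@$host:$REMOTE_HADOOP_DIR/etc/" </dev/null
    fi
  done < "$slaves_file"
}

# Example: DRY_RUN=1 push_conf etc/hadoop/slaves hadoop-zengjr.xiaoqee.com
```

Running it with `DRY_RUN=1` first is a cheap way to check the target list before copying anything.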

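QJM cluster startup

Here [nn1] is nn128 (hadoop-zengjr.xiaoqee.com) and [nn2] is nn129 (hadoop-slave01.xiaoqee.com).

1. On each JournalNode host, start the JournalNode daemon: `sbin/hadoop-daemon.sh start journalnode`
2. On [nn1], format the NameNode and then start it: `bin/hdfs namenode -format`, then `sbin/hadoop-daemon.sh start namenode`
3. On [nn2], sync nn1's metadata: `bin/hdfs namenode -bootstrapStandby`
4. Start [nn2]: `sbin/hadoop-daemon.sh start namenode`
5. Switch [nn1] to Active: `bin/hdfs haadmin -transitionToActive nn128`
6. Start the DataNodes: `sbin/hadoop-daemon.sh start datanode` starts only the DataNode on the local host, so run it on every DataNode host, or run `sbin/hadoop-daemons.sh start datanode` once on [nn1] to start DataNodes on all hosts listed in the slaves file.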
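The six steps can be driven from a single machine. This is a sketch under several assumptions: passwordless SSH from the machine running it to all three hosts, the install path /opt/modules/hadoop-2.5.0 everywhere, and a brand-new cluster (the `-format` step wipes existing metadata and must not be repeated). `run_on`, `start_qjm_cluster` and `DRY_RUN` are hypothetical names; the DataNode step uses `sbin/hadoop-daemons.sh` (plural), which runs the command on every host in the slaves file.

```shell
# Ordered QJM startup (sketch). DRY_RUN=1 prints "[host] command" instead of running it.
HADOOP_DIR=/opt/modules/hadoop-2.5.0
NN1=hadoop-zengjr.xiaoqee.com    # nn128
NN2=hadoop-slave01.xiaoqee.com   # nn129
JOURNAL_NODES="hadoop-zengjr.xiaoqee.com hadoop-slave01.xiaoqee.com hadoop-slave02.xiaoqee.com"

run_on() {
  local host=$1
  shift
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "[$host] $*"
  else
    ssh "$host" "cd $HADOOP_DIR && $*"
  fi
}

start_qjm_cluster() {
  local jn
  # Step 1: JournalNodes first; the format in step 2 needs them running
  for jn in $JOURNAL_NODES; do
    run_on "$jn" sbin/hadoop-daemon.sh start journalnode || return 1
  done
  # Step 2: format (brand-new cluster only!) and start nn1
  run_on "$NN1" bin/hdfs namenode -format || return 1
  run_on "$NN1" sbin/hadoop-daemon.sh start namenode || return 1
  # Steps 3-4: copy nn1's metadata to nn2, then start nn2
  run_on "$NN2" bin/hdfs namenode -bootstrapStandby || return 1
  run_on "$NN2" sbin/hadoop-daemon.sh start namenode || return 1
  # Step 5: make nn128 active; step 6: DataNodes on all slaves
  run_on "$NN1" bin/hdfs haadmin -transitionToActive nn128 || return 1
  run_on "$NN1" sbin/hadoop-daemons.sh start datanode
}

# Usage: DRY_RUN=1 start_qjm_cluster
```

A production script would also wait until the JournalNodes are listening on port 8485 before formatting, and until each NameNode is up before the next step; the sketch does not.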

Verifying the startup

Step 1 check:

```
[zengjr@hadoop-zengjr hadoop-2.5.0]$ sbin/hadoop-daemon.sh start journalnode
starting journalnode, logging to /opt/modules/hadoop-2.5.0/logs/hadoop-zengjr-journalnode-hadoop-zengjr.xiaoqee.com.out
[zengjr@hadoop-zengjr hadoop-2.5.0]$ jps
5557 JournalNode
5603 Jps
```
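Checking `jps` by eye is fine for a few hosts; the sketch below does it programmatically. It compares the class-name column exactly, so `NameNode` does not accidentally match `SecondaryNameNode`. `check_daemons` is an illustrative helper, not a Hadoop tool.

```shell
# Verify that every expected daemon appears in `jps` output read from stdin (sketch).
# Usage: jps | check_daemons JournalNode NameNode
check_daemons() {
  local jps_out missing="" d
  jps_out=$(cat)
  for d in "$@"; do
    # jps prints "<pid> <MainClass>"; require an exact match on the second field
    if ! printf '%s\n' "$jps_out" |
        awk -v d="$d" '$2 == d { found = 1 } END { exit (found ? 0 : 1) }'; then
      missing="$missing $d"
    fi
  done
  if [ -n "$missing" ]; then
    echo "missing:$missing"
    return 1
  fi
  echo "ok"
}
```

After step 2, `jps | check_daemons JournalNode NameNode` on hadoop-zengjr should print `ok`.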

Step 2 check:

```
[zengjr@hadoop-zengjr hadoop-2.5.0]$ bin/hdfs namenode -format
14/12/31 21:35:40 INFO namenode.NameNode: STARTUP_MSG:
/************************************************************
STARTUP_MSG: Starting NameNode
STARTUP_MSG: host = hadoop-zengjr.xiaoqee.com/192.168.29.128
STARTUP_MSG: args = [-format]
STARTUP_MSG: version = 2.5.0
STARTUP_MSG: classpath = /opt/modules/hadoop-2.5.0/etc/hadoop:/opt/modules/hadoop-2.5.0/share/hadoop/common/lib/asm-3.2.jar:...
STARTUP_MSG: build = http://svn.apache.org/repos/asf/hadoop/common -r 1616291; compiled by 'jenkins' on 2014-08-06T17:31Z
STARTUP_MSG: java = 1.7.0_67
************************************************************/
14/12/31 21:35:40 INFO namenode.NameNode: registered UNIX signal handlers for [TERM, HUP, INT]
14/12/31 21:35:40 INFO namenode.NameNode: createNameNode [-format]
Formatting using clusterid: CID-a5698cd1-64c9-463a-8737-7c7bb8f8c6cc
14/12/31 21:35:41 INFO namenode.FSNamesystem: fsLock is fair:true
14/12/31 21:35:42 INFO blockmanagement.DatanodeManager: dfs.block.invalidate.limit=1000
14/12/31 21:35:42 INFO blockmanagement.DatanodeManager: dfs.namenode.datanode.registration.ip-hostname-check=true
14/12/31 21:35:42 INFO blockmanagement.BlockManager: dfs.namenode.startup.delay.block.deletion.sec is set to 000:00:00:00.000
14/12/31 21:35:42 INFO blockmanagement.BlockManager: The block deletion will start around 2014 Dec 31 21:35:42
14/12/31 21:35:42 INFO util.GSet: Computing capacity for map BlocksMap
14/12/31 21:35:42 INFO util.GSet: VM type = 64-bit
14/12/31 21:35:42 INFO util.GSet: 2.0% max memory 966.7 MB = 19.3 MB
14/12/31 21:35:42 INFO util.GSet: capacity = 2^21 = 2097152 entries
14/12/31 21:35:42 INFO blockmanagement.BlockManager: dfs.block.access.token.enable=false
14/12/31 21:35:42 INFO blockmanagement.BlockManager: defaultReplication = 3
14/12/31 21:35:42 INFO blockmanagement.BlockManager: maxReplication = 512
14/12/31 21:35:42 INFO blockmanagement.BlockManager: minReplication = 1
14/12/31 21:35:42 INFO blockmanagement.BlockManager: maxReplicationStreams = 2
14/12/31 21:35:42 INFO blockmanagement.BlockManager: shouldCheckForEnoughRacks = false
14/12/31 21:35:42 INFO blockmanagement.BlockManager: replicationRecheckInterval = 3000
14/12/31 21:35:42 INFO blockmanagement.BlockManager: encryptDataTransfer = false
14/12/31 21:35:42 INFO blockmanagement.BlockManager: maxNumBlocksToLog = 1000
14/12/31 21:35:42 INFO namenode.FSNamesystem: fsOwner = zengjr (auth:SIMPLE)
14/12/31 21:35:42 INFO namenode.FSNamesystem: supergroup = supergroup
14/12/31 21:35:42 INFO namenode.FSNamesystem: isPermissionEnabled = false
14/12/31 21:35:42 INFO namenode.FSNamesystem: Determined nameservice ID: hadoop-cluster
14/12/31 21:35:42 INFO namenode.FSNamesystem: HA Enabled: true
14/12/31 21:35:42 INFO namenode.FSNamesystem: Append Enabled: true
14/12/31 21:35:42 INFO util.GSet: Computing capacity for map INodeMap
14/12/31 21:35:42 INFO util.GSet: VM type = 64-bit
14/12/31 21:35:42 INFO util.GSet: 1.0% max memory 966.7 MB = 9.7 MB
14/12/31 21:35:42 INFO util.GSet: capacity = 2^20 = 1048576 entries
14/12/31 21:35:42 INFO namenode.NameNode: Caching file names occuring more than 10 times
14/12/31 21:35:42 INFO util.GSet: Computing capacity for map cachedBlocks
14/12/31 21:35:42 INFO util.GSet: VM type = 64-bit
14/12/31 21:35:42 INFO util.GSet: 0.25% max memory 966.7 MB = 2.4 MB
14/12/31 21:35:42 INFO util.GSet: capacity = 2^18 = 262144 entries
14/12/31 21:35:42 INFO namenode.FSNamesystem: dfs.namenode.safemode.threshold-pct = 0.9990000128746033
14/12/31 21:35:42 INFO namenode.FSNamesystem: dfs.namenode.safemode.min.datanodes = 0
14/12/31 21:35:42 INFO namenode.FSNamesystem: dfs.namenode.safemode.extension = 30000
14/12/31 21:35:42 INFO namenode.FSNamesystem: Retry cache on namenode is enabled
14/12/31 21:35:42 INFO namenode.FSNamesystem: Retry cache will use 0.03 of total heap and retry cache entry expiry time is 600000 millis
14/12/31 21:35:42 INFO util.GSet: Computing capacity for map NameNodeRetryCache
14/12/31 21:35:42 INFO util.GSet: VM type = 64-bit
14/12/31 21:35:42 INFO util.GSet: 0.029999999329447746% max memory 966.7 MB = 297.0 KB
14/12/31 21:35:42 INFO util.GSet: capacity = 2^15 = 32768 entries
14/12/31 21:35:42 INFO namenode.NNConf: ACLs enabled? false
14/12/31 21:35:42 INFO namenode.NNConf: XAttrs enabled? true
14/12/31 21:35:42 INFO namenode.NNConf: Maximum size of an xattr: 16384
14/12/31 21:35:43 WARN ssl.FileBasedKeyStoresFactory: The property 'ssl.client.truststore.location' has not been set, no TrustStore will be loaded
14/12/31 21:35:44 INFO namenode.FSImage: Allocated new BlockPoolId: BP-186794310-192.168.29.128-1420079744554
14/12/31 21:35:44 INFO common.Storage: Storage directory /opt/modules/hadoop-2.5.0/data/dfs/nn/name has been successfully formatted.
14/12/31 21:35:44 INFO common.Storage: Storage directory /opt/modules/hadoop-2.5.0/data/dfs/nn/edits has been successfully formatted.
14/12/31 21:35:45 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0
14/12/31 21:35:45 INFO util.ExitUtil: Exiting with status 0
14/12/31 21:35:45 INFO namenode.NameNode: SHUTDOWN_MSG:
/************************************************************
SHUTDOWN_MSG: Shutting down NameNode at hadoop-zengjr.xiaoqee.com/192.168.29.128
************************************************************/
[zengjr@hadoop-zengjr hadoop-2.5.0]$ sbin/hadoop-daemon.sh start namenode
starting namenode, logging to /opt/modules/hadoop-2.5.0/logs/hadoop-zengjr-namenode-hadoop-zengjr.xiaoqee.com.out
[zengjr@hadoop-zengjr hadoop-2.5.0]$ jps
5753 Jps
5557 JournalNode
5670 NameNode
```
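The format output is long, but success comes down to two kinds of lines: each storage directory reports `has been successfully formatted`, and the process ends with `Exiting with status 0`. A sketch that checks a captured log for both, assuming the output was saved with `bin/hdfs namenode -format 2>&1 | tee format.log` (Hadoop's console logging goes to stderr, hence `2>&1`); `format_succeeded` is a hypothetical helper.

```shell
# Return success only if a captured `hdfs namenode -format` log shows
# a formatted storage directory AND a clean exit (sketch).
format_succeeded() {
  local log=$1
  grep -q 'has been successfully formatted' "$log" &&
    grep -q 'util.ExitUtil: Exiting with status 0' "$log"
}

# Example:
#   bin/hdfs namenode -format 2>&1 | tee format.log
#   format_succeeded format.log && echo "format OK"
```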

Steps 3 and 4 check:

[zengjr@hadoop-slave01 hadoop-2.5.0]$ bin/hdfs namenode -bootstrapStandby

14/12/31 21:36:24 INFO namenode.NameNode: STARTUP_MSG:

/************************************************************

STARTUP_MSG: Starting NameNode

STARTUP_MSG: host = hadoop-slave01.xiaoqee.com/192.168.29.129

STARTUP_MSG: args = [-bootstrapStandby]

STARTUP_MSG: version = 2.5.0

STARTUP_MSG: classpath = /opt/modules/hadoop-2.5.0/etc/hadoop:/opt/modules/hadoop-2.5.0/share/hadoop/common/lib/asm-3.2.jar:/opt/modules/hadoop-2.5.0/share/hadoop/common/lib/jersey-core-1.9.jar:/opt/modules/hadoop-2.5.0/share/hadoop/common/lib/apacheds-kerberos-codec-2.0.0-M15.jar:/opt/modules/hadoop-2.5.0/share/hadoop/common/lib/commons-configuration-1.6.jar:/opt/modules/hadoop-2.5.0/share/hadoop/common/lib/commons-beanutils-core-1.8.0.jar:/opt/modules/hadoop-2.5.0/share/hadoop/common/lib/slf4j-api-1.7.5.jar:/opt/modules/hadoop-2.5.0/share/hadoop/common/lib/commons-compress-1.4.1.jar:/opt/modules/hadoop-2.5.0/share/hadoop/common/lib/xz-1.0.jar:/opt/modules/hadoop-2.5.0/share/hadoop/common/lib/hamcrest-core-1.3.jar:/opt/modules/hadoop-2.5.0/share/hadoop/common/lib/commons-lang-2.6.jar:/opt/modules/hadoop-2.5.0/share/hadoop/common/lib/guava-11.0.2.jar:/opt/modules/hadoop-2.5.0/share/hadoop/common/lib/jersey-json-1.9.jar:/opt/modules/hadoop-2.5.0/share/hadoop/common/lib/commons-cli-1.2.jar:/opt/modules/hadoop-2.5.0/share/hadoop/common/lib/hadoop-auth-2.5.0.jar:/opt/modules/hadoop-2.5.0/share/hadoop/common/lib/hadoop-annotations-2.5.0.jar:/opt/modules/hadoop-2.5.0/share/hadoop/common/lib/jetty-6.1.26.jar:/opt/modules/hadoop-2.5.0/share/hadoop/common/lib/api-util-1.0.0-M20.jar:/opt/modules/hadoop-2.5.0/share/hadoop/common/lib/zookeeper-3.4.6.jar:/opt/modules/hadoop-2.5.0/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar:/opt/modules/hadoop-2.5.0/share/hadoop/common/lib/mockito-all-1.8.5.jar:/opt/modules/hadoop-2.5.0/share/hadoop/common/lib/jackson-mapper-asl-1.9.13.jar:/opt/modules/hadoop-2.5.0/share/hadoop/common/lib/httpcore-4.2.5.jar:/opt/modules/hadoop-2.5.0/share/hadoop/common/lib/jaxb-impl-2.2.3-1.jar:/opt/modules/hadoop-2.5.0/share/hadoop/common/lib/commons-collections-3.2.1.jar:/opt/modules/hadoop-2.5.0/share/hadoop/common/lib/apacheds-i18n-2.0.0-M15.jar:/opt/modules/hadoop-2.5.0/share/hadoop/common/lib/jsch-0.1.42.jar:/opt/modules/hadoop-2.5.0/share/hadoop/common
/lib/jets3t-0.9.0.jar:/opt/modules/hadoop-2.5.0/share/hadoop/common/lib/commons-math3-3.1.1.jar:/opt/modules/hadoop-2.5.0/share/hadoop/common/lib/commons-codec-1.4.jar:/opt/modules/hadoop-2.5.0/share/hadoop/common/lib/commons-el-1.0.jar:/opt/modules/hadoop-2.5.0/share/hadoop/common/lib/commons-beanutils-1.7.0.jar:/opt/modules/hadoop-2.5.0/share/hadoop/common/lib/java-xmlbuilder-0.4.jar:/opt/modules/hadoop-2.5.0/share/hadoop/common/lib/jackson-jaxrs-1.9.13.jar:/opt/modules/hadoop-2.5.0/share/hadoop/common/lib/junit-4.11.jar:/opt/modules/hadoop-2.5.0/share/hadoop/common/lib/jettison-1.1.jar:/opt/modules/hadoop-2.5.0/share/hadoop/common/lib/jasper-runtime-5.5.23.jar:/opt/modules/hadoop-2.5.0/share/hadoop/common/lib/jackson-xc-1.9.13.jar:/opt/modules/hadoop-2.5.0/share/hadoop/common/lib/netty-3.6.2.Final.jar:/opt/modules/hadoop-2.5.0/share/hadoop/common/lib/jaxb-api-2.2.2.jar:/opt/modules/hadoop-2.5.0/share/hadoop/common/lib/jackson-core-asl-1.9.13.jar:/opt/modules/hadoop-2.5.0/share/hadoop/common/lib/api-asn1-api-1.0.0-M20.jar:/opt/modules/hadoop-2.5.0/share/hadoop/common/lib/commons-digester-1.8.jar:/opt/modules/hadoop-2.5.0/share/hadoop/common/lib/jetty-util-6.1.26.jar:/opt/modules/hadoop-2.5.0/share/hadoop/common/lib/jersey-server-1.9.jar:/opt/modules/hadoop-2.5.0/share/hadoop/common/lib/jsp-api-2.1.jar:/opt/modules/hadoop-2.5.0/share/hadoop/common/lib/commons-net-3.1.jar:/opt/modules/hadoop-2.5.0/share/hadoop/common/lib/paranamer-2.3.jar:/opt/modules/hadoop-2.5.0/share/hadoop/common/lib/servlet-api-2.5.jar:/opt/modules/hadoop-2.5.0/share/hadoop/common/lib/commons-io-2.4.jar:/opt/modules/hadoop-2.5.0/share/hadoop/common/lib/snappy-java-1.0.4.1.jar:/opt/modules/hadoop-2.5.0/share/hadoop/common/lib/jsr305-1.3.9.jar:/opt/modules/hadoop-2.5.0/share/hadoop/common/lib/commons-logging-1.1.3.jar:/opt/modules/hadoop-2.5.0/share/hadoop/common/lib/xmlenc-0.52.jar:/opt/modules/hadoop-2.5.0/share/hadoop/common/lib/stax-api-1.0-2.jar:/opt/modules/hadoop-2.5.0/share/hadoop/common/
lib/protobuf-java-2.5.0.jar:/opt/modules/hadoop-2.5.0/share/hadoop/common/lib/commons-httpclient-3.1.jar:/opt/modules/hadoop-2.5.0/share/hadoop/common/lib/avro-1.7.4.jar:/opt/modules/hadoop-2.5.0/share/hadoop/common/lib/log4j-1.2.17.jar:/opt/modules/hadoop-2.5.0/share/hadoop/common/lib/activation-1.1.jar:/opt/modules/hadoop-2.5.0/share/hadoop/common/lib/jasper-compiler-5.5.23.jar:/opt/modules/hadoop-2.5.0/share/hadoop/common/lib/httpclient-4.2.5.jar:/opt/modules/hadoop-2.5.0/share/hadoop/common/hadoop-nfs-2.5.0.jar:/opt/modules/hadoop-2.5.0/share/hadoop/common/hadoop-common-2.5.0-tests.jar:/opt/modules/hadoop-2.5.0/share/hadoop/common/hadoop-common-2.5.0.jar:/opt/modules/hadoop-2.5.0/share/hadoop/hdfs:/opt/modules/hadoop-2.5.0/share/hadoop/hdfs/lib/asm-3.2.jar:/opt/modules/hadoop-2.5.0/share/hadoop/hdfs/lib/jersey-core-1.9.jar:/opt/modules/hadoop-2.5.0/share/hadoop/hdfs/lib/commons-lang-2.6.jar:/opt/modules/hadoop-2.5.0/share/hadoop/hdfs/lib/guava-11.0.2.jar:/opt/modules/hadoop-2.5.0/share/hadoop/hdfs/lib/commons-daemon-1.0.13.jar:/opt/modules/hadoop-2.5.0/share/hadoop/hdfs/lib/commons-cli-1.2.jar:/opt/modules/hadoop-2.5.0/share/hadoop/hdfs/lib/jetty-6.1.26.jar:/opt/modules/hadoop-2.5.0/share/hadoop/hdfs/lib/jackson-mapper-asl-1.9.13.jar:/opt/modules/hadoop-2.5.0/share/hadoop/hdfs/lib/commons-codec-1.4.jar:/opt/modules/hadoop-2.5.0/share/hadoop/hdfs/lib/commons-el-1.0.jar:/opt/modules/hadoop-2.5.0/share/hadoop/hdfs/lib/jasper-runtime-5.5.23.jar:/opt/modules/hadoop-2.5.0/share/hadoop/hdfs/lib/netty-3.6.2.Final.jar:/opt/modules/hadoop-2.5.0/share/hadoop/hdfs/lib/jackson-core-asl-1.9.13.jar:/opt/modules/hadoop-2.5.0/share/hadoop/hdfs/lib/jetty-util-6.1.26.jar:/opt/modules/hadoop-2.5.0/share/hadoop/hdfs/lib/jersey-server-1.9.jar:/opt/modules/hadoop-2.5.0/share/hadoop/hdfs/lib/jsp-api-2.1.jar:/opt/modules/hadoop-2.5.0/share/hadoop/hdfs/lib/servlet-api-2.5.jar:/opt/modules/hadoop-2.5.0/share/hadoop/hdfs/lib/commons-io-2.4.jar:/opt/modules/hadoop-2.5.0/share/hadoop/hdfs/li
b/jsr305-1.3.9.jar:/opt/modules/hadoop-2.5.0/share/hadoop/hdfs/lib/commons-logging-1.1.3.jar:/opt/modules/hadoop-2.5.0/share/hadoop/hdfs/lib/xmlenc-0.52.jar:/opt/modules/hadoop-2.5.0/share/hadoop/hdfs/lib/protobuf-java-2.5.0.jar:/opt/modules/hadoop-2.5.0/share/hadoop/hdfs/lib/log4j-1.2.17.jar:/opt/modules/hadoop-2.5.0/share/hadoop/hdfs/hadoop-hdfs-2.5.0.jar:/opt/modules/hadoop-2.5.0/share/hadoop/hdfs/hadoop-hdfs-nfs-2.5.0.jar:/opt/modules/hadoop-2.5.0/share/hadoop/hdfs/hadoop-hdfs-2.5.0-tests.jar:/opt/modules/hadoop-2.5.0/share/hadoop/yarn/lib/asm-3.2.jar:/opt/modules/hadoop-2.5.0/share/hadoop/yarn/lib/jersey-core-1.9.jar:/opt/modules/hadoop-2.5.0/share/hadoop/yarn/lib/guice-3.0.jar:/opt/modules/hadoop-2.5.0/share/hadoop/yarn/lib/javax.inject-1.jar:/opt/modules/hadoop-2.5.0/share/hadoop/yarn/lib/commons-compress-1.4.1.jar:/opt/modules/hadoop-2.5.0/share/hadoop/yarn/lib/xz-1.0.jar:/opt/modules/hadoop-2.5.0/share/hadoop/yarn/lib/commons-lang-2.6.jar:/opt/modules/hadoop-2.5.0/share/hadoop/yarn/lib/guava-11.0.2.jar:/opt/modules/hadoop-2.5.0/share/hadoop/yarn/lib/jersey-json-1.9.jar:/opt/modules/hadoop-2.5.0/share/hadoop/yarn/lib/commons-cli-1.2.jar:/opt/modules/hadoop-2.5.0/share/hadoop/yarn/lib/jetty-6.1.26.jar:/opt/modules/hadoop-2.5.0/share/hadoop/yarn/lib/zookeeper-3.4.6.jar:/opt/modules/hadoop-2.5.0/share/hadoop/yarn/lib/jackson-mapper-asl-1.9.13.jar:/opt/modules/hadoop-2.5.0/share/hadoop/yarn/lib/guice-servlet-3.0.jar:/opt/modules/hadoop-2.5.0/share/hadoop/yarn/lib/jersey-guice-1.9.jar:/opt/modules/hadoop-2.5.0/share/hadoop/yarn/lib/jaxb-impl-2.2.3-1.jar:/opt/modules/hadoop-2.5.0/share/hadoop/yarn/lib/commons-collections-3.2.1.jar:/opt/modules/hadoop-2.5.0/share/hadoop/yarn/lib/commons-codec-1.4.jar:/opt/modules/hadoop-2.5.0/share/hadoop/yarn/lib/jackson-jaxrs-1.9.13.jar:/opt/modules/hadoop-2.5.0/share/hadoop/yarn/lib/jettison-1.1.jar:/opt/modules/hadoop-2.5.0/share/hadoop/yarn/lib/jackson-xc-1.9.13.jar:/opt/modules/hadoop-2.5.0/share/hadoop/yarn/lib/netty-3.6.2.F
inal.jar:/opt/modules/hadoop-2.5.0/share/hadoop/yarn/lib/jaxb-api-2.2.2.jar:/opt/modules/hadoop-2.5.0/share/hadoop/yarn/lib/jackson-core-asl-1.9.13.jar:/opt/modules/hadoop-2.5.0/share/hadoop/yarn/lib/jetty-util-6.1.26.jar:/opt/modules/hadoop-2.5.0/share/hadoop/yarn/lib/jersey-server-1.9.jar:/opt/modules/hadoop-2.5.0/share/hadoop/yarn/lib/servlet-api-2.5.jar:/opt/modules/hadoop-2.5.0/share/hadoop/yarn/lib/commons-io-2.4.jar:/opt/modules/hadoop-2.5.0/share/hadoop/yarn/lib/leveldbjni-all-1.8.jar:/opt/modules/hadoop-2.5.0/share/hadoop/yarn/lib/jline-0.9.94.jar:/opt/modules/hadoop-2.5.0/share/hadoop/yarn/lib/jsr305-1.3.9.jar:/opt/modules/hadoop-2.5.0/share/hadoop/yarn/lib/commons-logging-1.1.3.jar:/opt/modules/hadoop-2.5.0/share/hadoop/yarn/lib/stax-api-1.0-2.jar:/opt/modules/hadoop-2.5.0/share/hadoop/yarn/lib/jersey-client-1.9.jar:/opt/modules/hadoop-2.5.0/share/hadoop/yarn/lib/protobuf-java-2.5.0.jar:/opt/modules/hadoop-2.5.0/share/hadoop/yarn/lib/commons-httpclient-3.1.jar:/opt/modules/hadoop-2.5.0/share/hadoop/yarn/lib/log4j-1.2.17.jar:/opt/modules/hadoop-2.5.0/share/hadoop/yarn/lib/activation-1.1.jar:/opt/modules/hadoop-2.5.0/share/hadoop/yarn/lib/aopalliance-1.0.jar:/opt/modules/hadoop-2.5.0/share/hadoop/yarn/hadoop-yarn-server-common-2.5.0.jar:/opt/modules/hadoop-2.5.0/share/hadoop/yarn/hadoop-yarn-server-web-proxy-2.5.0.jar:/opt/modules/hadoop-2.5.0/share/hadoop/yarn/hadoop-yarn-api-2.5.0.jar:/opt/modules/hadoop-2.5.0/share/hadoop/yarn/hadoop-yarn-server-resourcemanager-2.5.0.jar:/opt/modules/hadoop-2.5.0/share/hadoop/yarn/hadoop-yarn-applications-distributedshell-2.5.0.jar:/opt/modules/hadoop-2.5.0/share/hadoop/yarn/hadoop-yarn-server-tests-2.5.0.jar:/opt/modules/hadoop-2.5.0/share/hadoop/yarn/hadoop-yarn-common-2.5.0.jar:/opt/modules/hadoop-2.5.0/share/hadoop/yarn/hadoop-yarn-applications-unmanaged-am-launcher-2.5.0.jar:/opt/modules/hadoop-2.5.0/share/hadoop/yarn/hadoop-yarn-server-nodemanager-2.5.0.jar:/opt/modules/hadoop-2.5.0/share/hadoop/yarn/hadoop-yarn-cl
ient-2.5.0.jar:/opt/modules/hadoop-2.5.0/share/hadoop/yarn/hadoop-yarn-server-applicationhistoryservice-2.5.0.jar:/opt/modules/hadoop-2.5.0/share/hadoop/mapreduce/lib/asm-3.2.jar:/opt/modules/hadoop-2.5.0/share/hadoop/mapreduce/lib/jersey-core-1.9.jar:/opt/modules/hadoop-2.5.0/share/hadoop/mapreduce/lib/guice-3.0.jar:/opt/modules/hadoop-2.5.0/share/hadoop/mapreduce/lib/javax.inject-1.jar:/opt/modules/hadoop-2.5.0/share/hadoop/mapreduce/lib/commons-compress-1.4.1.jar:/opt/modules/hadoop-2.5.0/share/hadoop/mapreduce/lib/xz-1.0.jar:/opt/modules/hadoop-2.5.0/share/hadoop/mapreduce/lib/hamcrest-core-1.3.jar:/opt/modules/hadoop-2.5.0/share/hadoop/mapreduce/lib/hadoop-annotations-2.5.0.jar:/opt/modules/hadoop-2.5.0/share/hadoop/mapreduce/lib/jackson-mapper-asl-1.9.13.jar:/opt/modules/hadoop-2.5.0/share/hadoop/mapreduce/lib/guice-servlet-3.0.jar:/opt/modules/hadoop-2.5.0/share/hadoop/mapreduce/lib/jersey-guice-1.9.jar:/opt/modules/hadoop-2.5.0/share/hadoop/mapreduce/lib/junit-4.11.jar:/opt/modules/hadoop-2.5.0/share/hadoop/mapreduce/lib/netty-3.6.2.Final.jar:/opt/modules/hadoop-2.5.0/share/hadoop/mapreduce/lib/jackson-core-asl-1.9.13.jar:/opt/modules/hadoop-2.5.0/share/hadoop/mapreduce/lib/jersey-server-1.9.jar:/opt/modules/hadoop-2.5.0/share/hadoop/mapreduce/lib/paranamer-2.3.jar:/opt/modules/hadoop-2.5.0/share/hadoop/mapreduce/lib/commons-io-2.4.jar:/opt/modules/hadoop-2.5.0/share/hadoop/mapreduce/lib/leveldbjni-all-1.8.jar:/opt/modules/hadoop-2.5.0/share/hadoop/mapreduce/lib/snappy-java-1.0.4.1.jar:/opt/modules/hadoop-2.5.0/share/hadoop/mapreduce/lib/protobuf-java-2.5.0.jar:/opt/modules/hadoop-2.5.0/share/hadoop/mapreduce/lib/avro-1.7.4.jar:/opt/modules/hadoop-2.5.0/share/hadoop/mapreduce/lib/log4j-1.2.17.jar:/opt/modules/hadoop-2.5.0/share/hadoop/mapreduce/lib/aopalliance-1.0.jar:/opt/modules/hadoop-2.5.0/share/hadoop/mapreduce/hadoop-mapreduce-client-jobclient-2.5.0-tests.jar:/opt/modules/hadoop-2.5.0/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.5.0.jar:/opt/modu
les/hadoop-2.5.0/share/hadoop/mapreduce/hadoop-mapreduce-client-jobclient-2.5.0.jar:/opt/modules/hadoop-2.5.0/share/hadoop/mapreduce/hadoop-mapreduce-client-hs-plugins-2.5.0.jar:/opt/modules/hadoop-2.5.0/share/hadoop/mapreduce/hadoop-mapreduce-client-app-2.5.0.jar:/opt/modules/hadoop-2.5.0/share/hadoop/mapreduce/hadoop-mapreduce-client-shuffle-2.5.0.jar:/opt/modules/hadoop-2.5.0/share/hadoop/mapreduce/hadoop-mapreduce-client-core-2.5.0.jar:/opt/modules/hadoop-2.5.0/share/hadoop/mapreduce/hadoop-mapreduce-client-hs-2.5.0.jar:/opt/modules/hadoop-2.5.0/share/hadoop/mapreduce/hadoop-mapreduce-client-common-2.5.0.jar:/contrib/capacity-scheduler/*.jar

STARTUP_MSG: build = http://svn.apache.org/repos/asf/hadoop/common -r 1616291; compiled by 'jenkins' on 2014-08-06T17:31Z

STARTUP_MSG: java = 1.7.0_67

************************************************************/

14/12/31 21:36:24 INFO namenode.NameNode: registered UNIX signal handlers for [TERM, HUP, INT]

14/12/31 21:36:24 INFO namenode.NameNode: createNameNode [-bootstrapStandby]

=====================================================

About to bootstrap Standby ID nn129 from:

Nameservice ID: hadoop-cluster

Other Namenode ID: nn128

Other NN's HTTP address: http://hadoop-zengjr.xiaoqee.com:50070

Other NN's IPC address: hadoop-zengjr.xiaoqee.com/192.168.29.128:8022

Namespace ID: 148756489

Block pool ID: BP-186794310-192.168.29.128-1420079744554

Cluster ID: CID-a5698cd1-64c9-463a-8737-7c7bb8f8c6cc

Layout version: -57

=====================================================

14/12/31 21:36:27 INFO common.Storage: Storage directory /opt/modules/hadoop-2.5.0/data/dfs/nn/name has been successfully formatted.

14/12/31 21:36:27 INFO common.Storage: Storage directory /opt/modules/hadoop-2.5.0/data/dfs/nn/edits has been successfully formatted.

14/12/31 21:36:28 WARN ssl.FileBasedKeyStoresFactory: The property 'ssl.client.truststore.location' has not been set, no TrustStore will be loaded

14/12/31 21:36:28 WARN ssl.FileBasedKeyStoresFactory: The property 'ssl.client.truststore.location' has not been set, no TrustStore will be loaded

14/12/31 21:36:28 INFO namenode.TransferFsImage: Opening connection to http://hadoop-zengjr.xiaoqee.com:50070/imagetransfer?getimage=1&txid=0&storageInfo=-57:148756489:0:CID-a5698cd1-64c9-463a-8737-7c7bb8f8c6cc

14/12/31 21:36:28 INFO namenode.TransferFsImage: Image Transfer timeout configured to 60000 milliseconds

14/12/31 21:36:29 INFO namenode.TransferFsImage: Transfer took 0.02s at 0.00 KB/s

14/12/31 21:36:29 INFO namenode.TransferFsImage: Downloaded file fsimage.ckpt_0000000000000000000 size 352 bytes.

14/12/31 21:36:29 INFO util.ExitUtil: Exiting with status 0

14/12/31 21:36:29 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at hadoop-slave01.xiaoqee.com/192.168.29.129

************************************************************/

[zengjr@hadoop-slave01 hadoop-2.5.0]$ sbin/hadoop-daemon.sh start namenode

starting namenode, logging to /opt/modules/hadoop-2.5.0/logs/hadoop-zengjr-namenode-hadoop-slave01.xiaoqee.com.out

[zengjr@hadoop-slave01 hadoop-2.5.0]$ jps

5137 NameNode

5220 Jps

5031 JournalNode
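The `jps` check above can also be scripted. Below is a minimal, hypothetical Python sketch; the names `parse_jps`, `run_jps` and `missing_daemons` are illustrative and not part of Hadoop. It assumes the JDK's `jps` is on PATH and that every output line has the form `<pid> <MainClass>`.

```python
"""Check which Hadoop daemons `jps` reports on a host (illustrative sketch)."""
import subprocess
from typing import Set


def parse_jps(output: str) -> Set[str]:
    """Return the set of JVM main-class names from `jps` output.

    Each non-empty line is assumed to look like "<pid> <ClassName>"; the
    Jps process itself is dropped because it only reflects the query.
    """
    names = set()
    for line in output.splitlines():
        parts = line.split()
        if len(parts) >= 2 and parts[0].isdigit() and parts[1] != "Jps":
            names.add(parts[1])
    return names


def run_jps() -> str:
    # Assumes the JDK's `jps` binary is on PATH.
    return subprocess.run(["jps"], capture_output=True, text=True, check=True).stdout


def missing_daemons(expected: Set[str], jps_output: str) -> Set[str]:
    """Return the expected daemons that do not appear in the jps output."""
    return expected - parse_jps(jps_output)
```

For example, `missing_daemons({"NameNode", "JournalNode"}, run_jps())` on hadoop-slave01 at this point should return an empty set.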

Until step 5 has been carried out, both NameNodes are in standby state; after step 5, nn128 becomes active.
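One way to confirm which NameNode is active from a script is `hdfs haadmin -getServiceState <serviceId>`, which prints `active` or `standby`. The Python below is a minimal sketch, assuming it runs from the Hadoop home directory (hence `bin/hdfs`) and that the IDs are `nn128`/`nn129` from `dfs.ha.namenodes.hadoop-cluster`; `ha_states` and `single_active` are hypothetical helper names. The command runner is injectable so the logic can be checked without a live cluster.

```python
"""Query both NameNodes' HA state and confirm exactly one is active (sketch)."""
import subprocess
from typing import Callable, Dict, Iterable, List

Runner = Callable[[List[str]], str]


def default_runner(argv: List[str]) -> str:
    # Assumes the working directory is the Hadoop home, like the commands above.
    return subprocess.run(argv, capture_output=True, text=True, check=True).stdout


def ha_states(ids: Iterable[str], run: Runner = default_runner) -> Dict[str, str]:
    """Map each NameNode ID to the state printed by `hdfs haadmin -getServiceState`."""
    return {nn: run(["bin/hdfs", "haadmin", "-getServiceState", nn]).strip() for nn in ids}


def single_active(states: Dict[str, str]) -> str:
    """Return the ID of the only active NameNode, or raise if there is not exactly one."""
    active = [nn for nn, state in states.items() if state == "active"]
    if len(active) != 1:
        raise RuntimeError(f"expected exactly one active NameNode, got {states}")
    return active[0]
```

Before step 5, `single_active(ha_states(["nn128", "nn129"]))` would raise because both report `standby`; afterwards it should return `nn128`.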

Testing the cluster

[zengjr@hadoop-zengjr hadoop-2.5.0]$ bin/hdfs dfs -ls /

[zengjr@hadoop-zengjr hadoop-2.5.0]$ bin/hdfs dfs -mkdir -p /user/zengjr/tmp/conf

[zengjr@hadoop-zengjr hadoop-2.5.0]$ bin/hdfs dfs -put etc/hadoop/*.xml /user/zengjr/tmp/conf

[zengjr@hadoop-zengjr hadoop-2.5.0]$ bin/hdfs dfs -ls -R /

drwxr-xr-x - zengjr supergroup 0 2014-12-31 21:57 /user

drwxr-xr-x - zengjr supergroup 0 2014-12-31 21:57 /user/zengjr

drwxr-xr-x - zengjr supergroup 0 2014-12-31 21:57 /user/zengjr/tmp

drwxr-xr-x - zengjr supergroup 0 2014-12-31 21:58 /user/zengjr/tmp/conf

-rw-r--r-- 3 zengjr supergroup 3589 2014-12-31 21:58 /user/zengjr/tmp/conf/capacity-scheduler.xml

-rw-r--r-- 3 zengjr supergroup 1008 2014-12-31 21:58 /user/zengjr/tmp/conf/core-site.xml

-rw-r--r-- 3 zengjr supergroup 9201 2014-12-31 21:58 /user/zengjr/tmp/conf/hadoop-policy.xml

-rw-r--r-- 3 zengjr supergroup 4217 2014-12-31 21:58 /user/zengjr/tmp/conf/hdfs-site.xml

-rw-r--r-- 3 zengjr supergroup 620 2014-12-31 21:58 /user/zengjr/tmp/conf/httpfs-site.xml

-rw-r--r-- 3 zengjr supergroup 1235 2014-12-31 21:58 /user/zengjr/tmp/conf/mapred-site.xml

-rw-r--r-- 3 zengjr supergroup 1215 2014-12-31 21:58 /user/zengjr/tmp/conf/yarn-site.xml

[zengjr@hadoop-zengjr hadoop-2.5.0]$ bin/hdfs haadmin -transitionToStandby nn128

[zengjr@hadoop-zengjr hadoop-2.5.0]$ bin/hdfs haadmin -transitionToActive nn129
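The manual failover above is two `haadmin` calls in a fixed order: demote nn128, then promote nn129. A hypothetical Python helper that builds this sequence, and executes it only when `dry_run=False`, might look like the sketch below; it assumes the working directory is the Hadoop home, and `manual_failover_cmds`/`run_failover` are illustrative names.

```python
"""Build (and optionally run) the two-step manual failover sequence (sketch)."""
import subprocess
from typing import List


def manual_failover_cmds(from_nn: str, to_nn: str, hdfs: str = "bin/hdfs") -> List[List[str]]:
    """Demote the current active NameNode first, then promote the other one.

    Demoting first means the two NameNodes are never both active at once.
    """
    return [
        [hdfs, "haadmin", "-transitionToStandby", from_nn],
        [hdfs, "haadmin", "-transitionToActive", to_nn],
    ]


def run_failover(from_nn: str, to_nn: str, dry_run: bool = True) -> List[str]:
    """Return the commands as strings; execute them only when dry_run is False."""
    cmds = manual_failover_cmds(from_nn, to_nn)
    if not dry_run:
        for argv in cmds:
            subprocess.run(argv, check=True)  # stop at the first failing step
    return [" ".join(argv) for argv in cmds]
```

`hdfs haadmin` also has a `-failover <from> <to>` option that performs the switch in a single command; the two-step form is kept here only to mirror the commands above.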

[zengjr@hadoop-zengjr hadoop-2.5.0]$ bin/hdfs dfs -ls -R /

drwxr-xr-x - zengjr supergroup 0 2014-12-31 21:57 /user

drwxr-xr-x - zengjr supergroup 0 2014-12-31 21:57 /user/zengjr

drwxr-xr-x - zengjr supergroup 0 2014-12-31 21:57 /user/zengjr/tmp

drwxr-xr-x - zengjr supergroup 0 2014-12-31 21:58 /user/zengjr/tmp/conf

-rw-r--r-- 3 zengjr supergroup 3589 2014-12-31 21:58 /user/zengjr/tmp/conf/capacity-scheduler.xml

-rw-r--r-- 3 zengjr supergroup 1008 2014-12-31 21:58 /user/zengjr/tmp/conf/core-site.xml

-rw-r--r-- 3 zengjr supergroup 9201 2014-12-31 21:58 /user/zengjr/tmp/conf/hadoop-policy.xml

-rw-r--r-- 3 zengjr supergroup 4217 2014-12-31 21:58 /user/zengjr/tmp/conf/hdfs-site.xml

-rw-r--r-- 3 zengjr supergroup 620 2014-12-31 21:58 /user/zengjr/tmp/conf/httpfs-site.xml

-rw-r--r-- 3 zengjr supergroup 1235 2014-12-31 21:58 /user/zengjr/tmp/conf/mapred-site.xml

-rw-r--r-- 3 zengjr supergroup 1215 2014-12-31 21:58 /user/zengjr/tmp/conf/yarn-site.xml
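The two identical listings show that nn129 serves the same namespace after the failover. For more than a handful of files, the comparison can be done programmatically. This is an illustrative sketch only: it assumes the 8-column `-ls -R` layout printed above (permissions, replication, owner, group, size, date, time, path), and `parse_ls_r`/`changed_paths` are hypothetical names. Timestamps are ignored; only permissions, replication and size are compared.

```python
"""Compare two `hdfs dfs -ls -R` listings taken before and after failover (sketch)."""
from typing import Dict, Set, Tuple

Entry = Tuple[str, str, int]  # (permissions, replication, size in bytes)


def parse_ls_r(output: str) -> Dict[str, Entry]:
    """Map each path in an `-ls -R` listing to (permissions, replication, size)."""
    entries: Dict[str, Entry] = {}
    for line in output.splitlines():
        cols = line.split()
        if len(cols) < 8:
            continue  # skip blank lines and anything that is not a listing row
        path = " ".join(cols[7:])
        entries[path] = (cols[0], cols[1], int(cols[4]))
    return entries


def changed_paths(before: str, after: str) -> Set[str]:
    """Return paths that were added, removed or changed between the two listings."""
    a, b = parse_ls_r(before), parse_ls_r(after)
    return {p for p in a.keys() | b.keys() if a.get(p) != b.get(p)}
```

An empty result from `changed_paths(listing_before, listing_after)` corresponds to what is seen above.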

QJM automatic failover

Start the ZooKeeper service, then stop the NameNode, DataNode and JournalNode services

[zengjr@hadoop-slave01 zookeeper]$ ./bin/zkServer.sh start

JMX enabled by default

Using config: /opt/modules/zookeeper/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

[zengjr@hadoop-slave01 zookeeper]$ cd ../hadoop-2.5.0

[zengjr@hadoop-slave01 hadoop-2.5.0]$ sbin/hadoop-daemon.sh stop datanode

stopping datanode

[zengjr@hadoop-slave01 hadoop-2.5.0]$ sbin/hadoop-daemon.sh stop journalnode

stopping journalnode

[zengjr@hadoop-slave01 hadoop-2.5.0]$ sbin/hadoop-daemon.sh stop namenode

stopping namenode

[zengjr@hadoop-slave01 hadoop-2.5.0]$ jps

2491 QuorumPeerMain

2635 Jps

Add the following to hdfs-site.xml

<property>

<name>dfs.ha.automatic-failover.enabled</name>

<value>true</value>

</property>

Add the following to core-site.xml

<property>

<name>ha.zookeeper.quorum</name>

<value>hadoop-zengjr.xiaoqee.com:2181,hadoop-slave02.xiaoqee.com:2181,hadoop-slave01.xiaoqee.com:2181</value>

</property>

# Sync the configuration to the other machines

scp -r etc/hadoop/ zengjr@hadoop-slave01.xiaoqee.com:/opt/modules/hadoop-2.5.0/etc/

scp -r etc/hadoop/ zengjr@hadoop-slave02.xiaoqee.com:/opt/modules/hadoop-2.5.0/etc/

Initialize the HA state in ZooKeeper

bin/hdfs zkfc -formatZK

The following state can then be seen in ZooKeeper:

WatchedEvent state:SyncConnected type:None path:null

[zk: localhost:2181(CONNECTED) 0] ls /

[zookeeper]

[zk: localhost:2181(CONNECTED) 1] ls /

[zookeeper]

[zk: localhost:2181(CONNECTED) 2] ls /

[hadoop-ha, zookeeper]

[zk: localhost:2181(CONNECTED) 3] ls /hadoop-ha

[hadoop-cluster]

[zk: localhost:2181(CONNECTED) 4] ls /hadoop-ha/hadoop-cluster

[]

Start the JournalNode (on all of 128, 129 and 130)

sbin/hadoop-daemon.sh start journalnode

Start the NameNode (on both 128 and 129)

sbin/hadoop-daemon.sh start namenode

Start the DataNode (on all of 128, 129 and 130)

sbin/hadoop-daemon.sh start datanode

Start the ZKFC (on both 128 and 129)

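sbin/hadoop-daemon.sh start zkfc

The following state can then be seen in ZooKeeper:

[zk: localhost:2181(CONNECTED) 4] ls /hadoop-ha/hadoop-cluster

[ActiveBreadCrumb, ActiveStandbyElectorLock]

Test: kill the NameNode process on the active node with kill -9 <process ID>; the standby and active roles of the two nodes then switch automatically.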
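During the kill -9 test, the takeover can be observed by polling the surviving NameNode until ZKFC has promoted it. This is a minimal sketch under stated assumptions: it runs `bin/hdfs haadmin -getServiceState` from the Hadoop home directory, treats a non-zero exit (for example, an unreachable NameNode) as "no state", and uses an arbitrary 60-second timeout and 2-second interval that are not Hadoop defaults. `get_state` and `wait_for_active` are hypothetical names; the state lookup is injectable for testing.

```python
"""Poll a NameNode after the active one is killed until it takes over (sketch)."""
import subprocess
import time
from typing import Callable, Optional


def get_state(nn: str) -> Optional[str]:
    """Return "active"/"standby", or None if the NameNode cannot be queried."""
    proc = subprocess.run(["bin/hdfs", "haadmin", "-getServiceState", nn],
                          capture_output=True, text=True)
    return proc.stdout.strip() if proc.returncode == 0 else None


def wait_for_active(nn: str, timeout: float = 60.0, interval: float = 2.0,
                    state_of: Callable[[str], Optional[str]] = get_state) -> float:
    """Block until `nn` reports "active"; return seconds waited or raise TimeoutError."""
    start = time.monotonic()
    while True:
        if state_of(nn) == "active":
            return time.monotonic() - start
        if time.monotonic() - start >= timeout:
            raise TimeoutError(f"{nn} did not become active within {timeout}s")
        time.sleep(interval)
```

For example, after killing the NameNode on hadoop-zengjr (nn128), `wait_for_active("nn129")` should return once nn129 has been promoted.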